What GPT Cache can do

Two questions:

- question1 = “what do you think about GPT”

- question2 = “what do you feel like GPT”

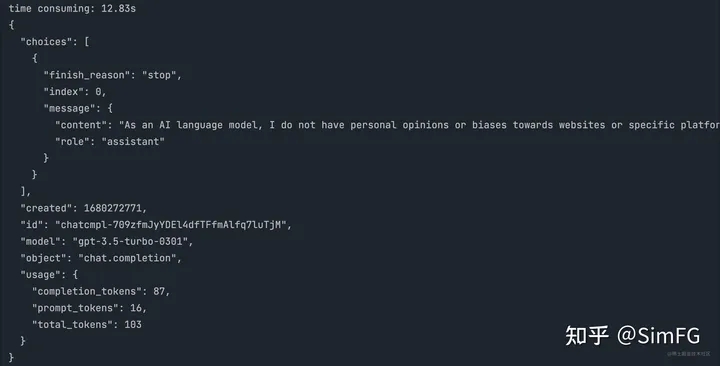

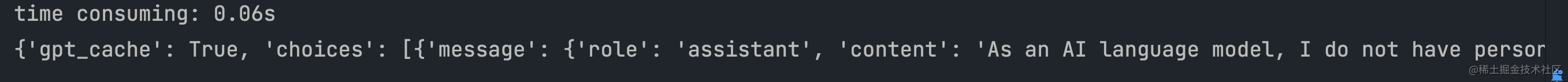

The first request goes to GPT for an answer and took 12 s in our test. The second request hits the cache and takes only 0.06 s.

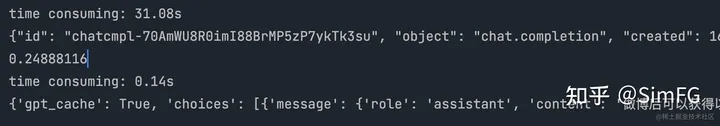

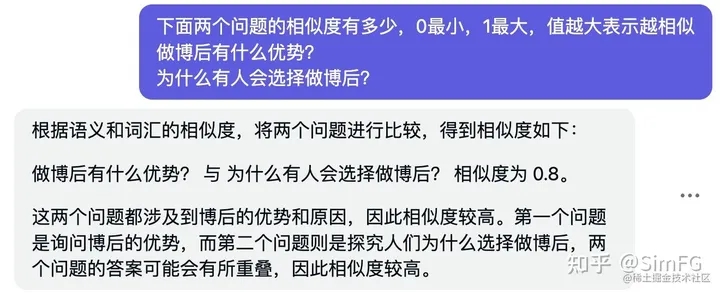

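Chinese questions:

When testing with Chinese, remember to switch the embedding model: `towhee = Towhee(model="uer/albert-base-chinese-cluecorpussmall")`

- question1 = “做博后有什么优势?” (What are the advantages of doing a postdoc?)

- question2 = “为什么有人会选择做博后?” (Why do some people choose to do a postdoc?)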

The first request goes to GPT for an answer and took 31 s in our test. The second request hits the cache and takes only 0.14 s.

If you find it useful, a star is welcome. Project: https://github.com/zilliztech/gptcache

What is GPT Cache?

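Large language models (LLMs) are a promising, transformative technology that has advanced rapidly in recent years. They can generate natural-language text and have many applications, including chatbots, language translation, and creative writing. However, as these models grow larger, so do the cost and the performance demands of using them, which makes building GPT-style applications on top of large models a significant challenge.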

To address this, we built GPT Cache, a project focused on caching language model responses, also known as a semantic cache. It offers two main benefits:

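- Faster responses: answering from the cache is much faster than running inference on a large model, which lowers latency and lets user requests be answered sooner.

- Lower serving costs: most GPT services currently bill by usage. Every request that hits the cache is one fewer call to the paid API, which reduces the cost of the service.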
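The two benefits can be put into numbers with a back-of-the-envelope model. The sketch below assumes a cache hit rate `h`, that a hit costs `t_cache` seconds and no API fee, and that a miss costs `t_llm` seconds plus one billed request. The average latency is then `h * t_cache + (1 - h) * t_llm`, and `N * (1 - h)` of `N` requests are billed. The function names are hypothetical and the 50% hit rate is purely illustrative; only the 12.83 s and 0.06 s timings come from the test in this article.

```python
def expected_latency(hit_rate: float, t_cache: float, t_llm: float) -> float:
    """Average seconds per request when a fraction `hit_rate` is served from the cache."""
    return hit_rate * t_cache + (1 - hit_rate) * t_llm


def billed_requests(total_requests: int, hit_rate: float) -> int:
    """Requests that still reach the paid LLM API, assuming cache hits are not billed."""
    return round(total_requests * (1 - hit_rate))


# Timings from the English-question test: 12.83 s on a miss, 0.06 s on a hit.
# The 50% hit rate is an assumption chosen only for illustration.
print(expected_latency(0.5, t_cache=0.06, t_llm=12.83))  # about 6.4 s instead of 12.83 s
print(billed_requests(1000, hit_rate=0.5))               # 500 of 1000 requests billed
```

Both savings scale linearly with the hit rate, so they depend entirely on how often users ask the same or similar questions.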

Why does a GPT cache help?

I believe a cache is worthwhile, for the following reasons:

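- Locality is everywhere. Like traditional application systems, AIGC applications face hot-spot problems: many users ask about the same popular topics. For example, GPT itself is a hot topic among programmers.

- For domain-specific SaaS services, users tend to ask questions within one particular domain, so their queries show temporal and spatial locality.

- Vector similarity search makes it possible to match a new question against semantically similar questions that were already answered, at relatively low cost.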
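To make the idea concrete, here is a toy semantic cache in plain Python. It is a hypothetical sketch, not GPTCache's implementation: it swaps the neural embedding model for a bag-of-words vector (`embed`), compares vectors with cosine similarity, and treats the nearest cached question as a hit only if its similarity reaches an assumed threshold of 0.6. An exact-match dictionary is checked first, since identical questions need no vector search at all.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding (assumption): sparse bag-of-words counts of lowercase tokens.
    A real semantic cache would use a sentence-embedding model instead."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class SemanticCache:
    """Hypothetical minimal semantic cache: exact match first, then nearest neighbour."""

    def __init__(self, threshold: float = 0.6):
        self.threshold = threshold
        self.exact = {}    # question text -> answer
        self.entries = []  # list of (embedding, answer) pairs

    def put(self, question: str, answer: str) -> None:
        self.exact[question] = answer
        self.entries.append((embed(question), answer))

    def get(self, question: str):
        if question in self.exact:  # identical request: no similarity search needed
            return self.exact[question]
        query = embed(question)
        best_score, best_answer = 0.0, None
        for vector, answer in self.entries:  # linear scan; a vector index would replace this
            score = cosine(query, vector)
            if score > best_score:
                best_score, best_answer = score, answer
        # Only a sufficiently similar neighbour counts as a cache hit
        return best_answer if best_score >= self.threshold else None


demo = SemanticCache(threshold=0.6)
demo.put("what do you think about GPT", "cached answer")
print(demo.get("what do you feel like GPT"))  # cached answer (4 of 6 tokens shared)
print(demo.get("how do I bake bread"))        # None
```

With real sentence embeddings, questions would be matched by meaning rather than by shared words, and a production system would replace the linear scan with a vector index such as Faiss.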

Quick start

Project: https://github.com/zilliztech/gptcache

Install with pip

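```shell
pip install gptcache
```

If you only need exact-match caching, i.e. a cache hit only when two requests are exactly identical, integrating the cache takes just two steps: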

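- Initialize the cache

```python
from gptcache.core import cache

cache.init()
# If you set the API key with `openai.api_key = xxx`, replace that line with the call below.
# It reads the OPENAI_API_KEY environment variable and sets the key, keeping the key out of your source code.
cache.set_openai_key()
```

- Replace the original openai package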

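```python
# Import openai from the GPTCache adapter instead of the original openai package
from gptcache.adapter import openai

# The openai request itself needs no changes
answer = openai.ChatCompletion.create(
    model="gpt-3.5-turbo",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "foo"}
    ],
)
```

To quickly try vector-similarity caching locally, you can use the following code. The first run takes a while, because it needs to download the model environment, model data, and dependencies.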

```python
import json
import os
import time

from gptcache.cache.factory import get_si_data_manager
from gptcache.core import cache
from gptcache.encoder import Towhee
from gptcache.ranker.simple import pair_evaluation
from gptcache.adapter import openai


def run():
    # Default (English) embedding model; for Chinese questions use the commented-out model
    towhee = Towhee()
    # towhee = Towhee(model="uer/albert-base-chinese-cluecorpussmall")

    # Replace "API KEY" with your own OpenAI API key
    os.environ["OPENAI_API_KEY"] = "API KEY"
    cache.set_openai_key()

    # Cached data goes into SQLite, question embeddings into a Faiss vector index
    data_manager = get_si_data_manager("sqlite", "faiss",
                                       dimension=towhee.dimension(), max_size=2000)
    cache.init(embedding_func=towhee.to_embeddings,
               data_manager=data_manager,
               evaluation_func=pair_evaluation,
               similarity_threshold=1,
               similarity_positive=False)

    question1 = "what do you think about GPT"
    question2 = "what do you feel like GPT"
    # question1 = "做博后有什么优势?"
    # question2 = "为什么有人会选择做博后?"

    # First request: cache miss, so the question is sent to the OpenAI API
    start_time = time.time()
    answer = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        messages=[
            {"role": "user", "content": question1}
        ],
    )
    end_time = time.time()
    print("time consuming: {:.2f}s".format(end_time - start_time))
    print(json.dumps(answer))

    # Second request: a similar (not identical) question, expected to hit the cache
    start_time = time.time()
    answer = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        messages=[
            {"role": "user", "content": question2}
        ],
    )
    end_time = time.time()
    print("time consuming: {:.2f}s".format(end_time - start_time))
    print(answer)


if __name__ == '__main__':
    run()
```

Actual output:

```
time consuming: 12.83s
{
  "choices": [
    {
      "finish_reason": "stop",
      "index": 0,
      "message": {
        "content": "As an AI language model, I do not have personal opinions or biases towards websites or specific platforms. However, I can say that GPT is a platform designed for interactive communication where users can connect with others and have conversations on various topics such as lifestyle, relationships, health, and personal growth among others. It could be a useful tool for individuals seeking support or advice from peers, or just for casual conversations to pass the time.",
        "role": "assistant"
      }
    }
  ],
  "created": 1680272771,
  "id": "chatcmpl-709zfmJyYDEl4dfTFfmAlfq7luTjM",
  "model": "gpt-3.5-turbo-0301",
  "object": "chat.completion",
  "usage": {
    "completion_tokens": 87,
    "prompt_tokens": 16,
    "total_tokens": 103
  }
}
time consuming: 0.06s
{'gpt_cache': True, 'choices': [{'message': {'role': 'assistant', 'content': 'As an AI language model, I do not have personal opinions or biases towards websites or specific platforms. However, I can say that GPT is a platform designed for interactive communication where users can connect with others and have conversations on various topics such as lifestyle, relationships, health, and personal growth among others. It could be a useful tool for individuals seeking support or advice from peers, or just for casual conversations to pass the time.'}, 'finish_reason': 'stop', 'index': 0}]}
```

As you can see, the first request took 12.83 s, while the second, similar question hit the cache and returned in only 0.06 s.
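In the output above, the cached response carries an extra `'gpt_cache': True` field, while the fresh response from OpenAI has no such field. Assuming that holds in general (an inference from this one sample, not a documented guarantee), a small helper like the hypothetical `cache_hit_rate` below can track how often requests are served from the cache, which is the number that drives the latency and cost savings. It also assumes each response supports dict-style `.get`.

```python
def cache_hit_rate(responses) -> float:
    """Fraction of responses served from the cache.

    Assumption: cached responses contain 'gpt_cache': True, fresh API responses do not.
    """
    if not responses:
        return 0.0
    hits = sum(1 for response in responses if response.get("gpt_cache", False))
    return hits / len(responses)


responses = [
    {"choices": [], "model": "gpt-3.5-turbo-0301"},  # fresh API response: no gpt_cache key
    {"gpt_cache": True, "choices": []},               # response served from the cache
]
print(cache_hit_rate(responses))  # 0.5
```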

© Copyright notice

The copyright of this article belongs to the author. Please do not reprint it without permission.
